Whitepaper: Near Misses (Part 1)

NEAR MISSES

Transforming Potential Incidents into Learning Opportunities

Where lagging and leading indicators meet.

It seems that everyone wishes to use near misses to further their cause. As near misses can be valuable opportunities for learning and improvement, this should be good news. The problem is that rational discussion of near misses tends to degenerate into debates about whether they are lagging or leading indicators of safety. Perhaps, though, examining near misses can help discredit some of the misguided arguments about lagging and leading indicators.

Don’t miss the point.

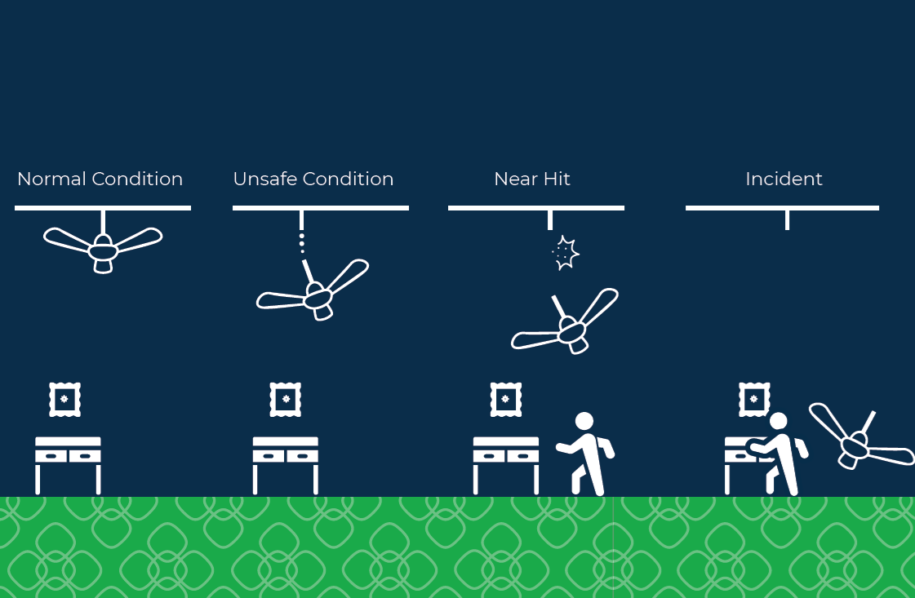

Let’s begin by clarifying what we want to convey: was the event a near miss or a near hit?

The term “near miss” is conventional and recognizable. Drawing on the idea of cognitive ease in Daniel Kahneman’s book Thinking, Fast and Slow, however, the familiar phrase “near miss” may register as the opposite of what is intended: safe or comfortable. The phrase “near hit” is less common but may elicit a stronger emotional reaction, drawing greater attention to the same event. The choice is up to you, but one may get your point across more clearly than the other.

Let’s not just pretend this didn’t happen.

Near misses are sometimes thought of as an event without effect, but to the discerning, they offer insight into a potentially more serious occurrence. In this light, a near miss serves as a learning opportunity. This understanding enables action towards preventing repeat occurrences with the possibility of a more disastrous outcome, transforming near misses into leading indicators that can be employed in the creation of a safer environment.

Something worse could have occurred, but something still occurred.

By OSHA’s definition, a near miss describes “an incident in which no property was damaged and no personal injury was sustained, but where, given a slight shift in time or position, damage and/or injury easily could have occurred” (“Near Miss” 1). The implied meaning within this definition is that, while no actual harm was inflicted, the event that transpired was still undesirable. Here, a near miss is treated as a lagging indicator.

Are conditions safe or unsafe?

There is a clear distinction between a “near miss” and an “unsafe condition.” An unsafe condition exists independently of any incident and is, therefore, a leading indicator. Examples of unsafe conditions include corrosion on steel walkways, defective brakes, uninspected pressure vessels, PPE that is not being worn, and faulty electrical grounding. By contrast, walking on the walkway when it gives way, without being injured, would be considered a near miss.
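The distinction can be written down as a small decision rule. The following is only an illustrative sketch: the names `Classification` and `classify` and the two yes/no inputs are assumptions made for this example, not part of any OSHA or ISO scheme.

```python
from enum import Enum


class Classification(Enum):
    UNSAFE_CONDITION = "unsafe condition"  # hazard present, no event yet
    NEAR_MISS = "near miss"                # event occurred, no harm resulted
    HARMFUL_INCIDENT = "harmful incident"  # event occurred with injury or damage


def classify(event_occurred: bool, harm_resulted: bool) -> Classification:
    """Classify an observation using the distinction drawn in the text."""
    if not event_occurred:
        if harm_resulted:
            raise ValueError("harm cannot result without an event")
        return Classification.UNSAFE_CONDITION
    if harm_resulted:
        return Classification.HARMFUL_INCIDENT
    return Classification.NEAR_MISS


# Corrosion found on a steel walkway during an inspection.
print(classify(event_occurred=False, harm_resulted=False).value)  # unsafe condition
# The walkway gives way under someone, who is not injured.
print(classify(event_occurred=True, harm_resulted=False).value)   # near miss
```

A real reporting form would capture far more than two flags, but the sketch shows why the corroded walkway and the walkway giving way fall into different categories.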

When to act.

When near misses are classified as lagging indicators, the implication is not that it is too late for any sort of preventative action. Deterring that specific event is no longer possible, but as with any incident (not just fatalities), investigating the source to learn how to handle and prevent a recurrence is imperative. The lagging indicator created by the incident serves as a leading indicator for learning and improving.

Same, same but different.

No matter how you classify them, near misses are indicators. They can justifiably be defined as either lagging or leading.

Their existence represents the presence of something undesirable, though luck saved the day, and they provide evidence that can be analyzed to reduce the possibility of future injury or damage.

Proactively analyzing the available data and using it for continuous improvement is an important takeaway from near misses. Spending time dogmatically arguing that lagging indicators are “too late” or that leading indicators are “too subjective” wastes the learning opportunity.

How we continuously improve.

Within the realm of safety, constant improvement is accomplished by employing the Plan-Do-Check-Act (PDCA) or Plan-Do-Study-Act (PDSA) methodologies:

PDCA, or the Deming Cycle, has become the foundation of management system standards (e.g., ISO 45001, ANSI Z10, etc.) and is a common tool for generating change for the better.

PDSA, or the Shewhart Cycle, is referred to in Out of the Crisis and The New Economics. In these books, Deming prefers the term “study” over “check,” as the former better establishes the concept of attempting to understand the state of things instead of just answering whether or not a condition exists. PDSA can help define a baseline for operational safety and create safety realities from safety precursors.

Completing the cycle.

It may take some time to establish safety measures for work. What is most important is planning for what will enable consistency.

PDSA can be used in both creating and implementing safety precursors. Part of effectively implementing any change is monitoring how consistently the plan is being put into action and how well it is being carried out. Observations from inspections can be used as leading indicators to ensure actions are completed and improvements are effective.

Near miss reporting should also be encouraged, so as not to miss out on the learning potential from this important leading indicator of safety. A resulting increase in reported near misses is not necessarily indicative of a worsening situation; it may simply signify greater trust and accountability. Reporting should always be celebrated, not reprimanded. This helps establish that the ultimate desire is to improve and encourage proactivity. Fulfilling a complete PDSA cycle by soliciting feedback and correcting shortcomings will lead to an increased likelihood of successfully achieving your safety precursors.

How safe are your safety efforts?

While choosing indicators wisely will improve overall safety performance, it is important to recognize that even the best intentions are still a matter of judgment. As far as that judgment goes, posing the question “are we safe?” will always result in unmet expectations. “Are we doing what is right for us?” is the plumb line that, with the best-laid plans, can be more positively answered.

Despite carefully chosen indicators and excellent performance, overlooking even one crucial detail can lead to an accident. In such a case, near misses, injuries, and structural damage provide necessary insight into safety system weaknesses (gaps). This shows that assessments of safety cannot rely exclusively on leading indicators, leading us to our final question: “Was anyone injured?” This last step in the process for reporting safety performance can be answered with the assistance of data from lagging indicators (e.g., injuries and illnesses). Some common examples are Total Recordable Incident Rate (TRIR), Lost Time Injuries (LTI), and Days Away, Restricted, or Transferred (DART). Still, if the ultimate goal is proactive safety and not simply compliance, the devil is in the details. Getting caught up in comparing numbers detracts from what they represent: the safety of YOUR people.
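For readers less familiar with how TRIR and DART rates are calculated, here is a minimal sketch of the usual OSHA incidence-rate arithmetic: the number of cases multiplied by 200,000 and divided by total hours worked. The 200,000 figure represents 100 full-time workers at 40 hours a week for 50 weeks. The function name and the site figures are hypothetical, chosen only for illustration.

```python
# Base hours for OSHA incidence rates:
# 100 full-time workers x 40 hours/week x 50 weeks/year.
OSHA_BASE_HOURS = 200_000


def incidence_rate(case_count: int, hours_worked: float) -> float:
    """Return cases per 100 full-time-equivalent workers per year."""
    if hours_worked <= 0:
        raise ValueError("hours_worked must be positive")
    return case_count * OSHA_BASE_HOURS / hours_worked


# Hypothetical site figures, for illustration only.
hours = 400_000        # total hours worked by all employees in the year
recordable_cases = 6   # all recordable injuries and illnesses
dart_cases = 3         # cases with days away, restriction, or transfer

trir = incidence_rate(recordable_cases, hours)
dart = incidence_rate(dart_cases, hours)
print(f"TRIR: {trir:.2f}")  # TRIR: 3.00
print(f"DART: {dart:.2f}")  # DART: 1.50
```

The arithmetic is simple, which is part of the caution: two sites with the same rate can have very different underlying conditions.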

“You’ve Got To Ask Yourself One Question: ‘Do I Feel Lucky?’” (Dirty Harry)

If few or no injuries are being reported, don’t just pat yourself on the back for a job well done. Investigate why this is the case. Is the reporting process being carried out correctly, and is it trustworthy? If injuries have been reported, an investigation is still the next step. Specifics (including the type and severity of the injury, the type of work, and the conditions and location where it took place) need to be established. In planning for a safer future, knowledge is power. The aim is not to find “the one to blame”; rather, the more thorough your post-incident investigations, the less likely the incident is to be repeated.

Additionally, reporting on near misses gives a larger-scale view of what working conditions are actually like. Again, the volume of near miss reports should not be distorted, either by gimmicky safety promotions that reward hitting a target or by fear of reprimand for more serious incidents.

To improve leading indicators, draw insights from studying your lagging performance indicators. This will give a holistic approach and help control the safety risks present in your facilities. For example, if soft tissue injuries are frequently occurring, emphasis may need to be placed on material handling or ergonomics. Checklists and subsequent conversations for safety should be revised as needed, establishing leading indicators to measure performance in relevant areas.
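As a rough sketch of this kind of review, the snippet below tallies a hypothetical injury log by type and suggests a focus area for the most frequent category. The log entries and the `top_focus` helper are invented for illustration. Only the soft tissue mapping comes from the example in the text; any real program would supply its own.

```python
from collections import Counter

# Hypothetical injury log entries (lagging data); categories are illustrative.
injury_log = [
    "soft tissue", "laceration", "soft tissue",
    "soft tissue", "eye", "laceration",
]

# Only the soft tissue mapping is taken from the text; others need local review.
FOCUS_AREAS = {"soft tissue": "material handling / ergonomics"}


def top_focus(log, n=1):
    """Return the n most frequent injury types with a suggested focus area."""
    counts = Counter(log)
    return [
        (injury, count, FOCUS_AREAS.get(injury, "needs review"))
        for injury, count in counts.most_common(n)
    ]


print(top_focus(injury_log))
# [('soft tissue', 3, 'material handling / ergonomics')]
```

The output is a prompt for conversation rather than a conclusion: the count shows where to look, and the checklist revisions still depend on what the investigation finds.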

Building an operational definition of safety, the PDSA way.

When crafting an operational definition of safety, there are a few things to consider:

- What is your organization’s ideal in terms of safety?

- What methodology will you employ to generate safety improvements?

- What judgment will determine whether you have successfully achieved these first two?

Taking these questions into account helps reveal a balanced approach that integrates both leading and lagging indicators into your operational definition of safety. Continuous improvement is achieved through understanding and conscious improvement efforts. These actions are measured by leading indicators, optimizing a safety system’s performance. Accidents provide data for judging how effective the preventive action was. Studying accidents lends insight into what needs to be changed in prevention measures and leading indicators.

Let’s not forget what “near miss” really means.

Having repeatedly emphasized the benefits of near miss reporting in investigatory safety programs, let’s not forget that each incident does represent a failure. In Managing the Unexpected, Karl Weick and Kathleen Sutcliffe identify a preoccupation with failure as a common element in High-Reliability Organizations (HROs). Their theory stresses that near misses indicate that a system is not operating optimally. As neglecting to address an incident (or “failure”) could lead to severe consequences, they state that organizations should “Interpret a near miss as danger in the guise of safety rather than safety in the guise of danger” (p. 152). Similarly, the common cause hypothesis holds that the same path that led to an incident may lead to no injury, a minor injury, or a major injury.

Objects in mirror are closer than they appear.

One argument against lagging indicators is that using them is like driving a car by looking only in the rear view mirrors. While this does paint an amusing (or terrifying) mental picture, the logic does not hold up.

We drive safely by looking forward and using rear view mirrors. While our attention may have to shift from one to the other, we need both to completely take in our surroundings. Using both leading and lagging indicators is part of a “whole picture” safety approach.

That finishing touch.

The result of safety efforts (lagging indicators) and the process involved in making those safety efforts (leading indicators) are both necessary for crafting a continuously improving safety program. Using leading indicators helps determine how precisely daily operations are being carried out. However, our judgment of our safety efforts should be constantly revised by both our successes and failures. Lagging indicators provide important feedback on how accurately “necessary” safety measures have been identified. The final element is full comprehension of the data, so it does not end up manipulated to suit some other agenda.

Letting agendas and arguments (e.g., leading vs. lagging) get in the way will only impede performance. Taking action after near misses is essential to improvement. Ultimately, near misses serve as an indicator of a failure (and opportunity) to meet lifesaving standards.

References

- Deming, W.E., 1986, Out of the Crisis. Cambridge, MA: MIT.

- Deming, W.E., 1993, The New Economics. Cambridge, MA: MIT.

- Dirty Harry. Dir. Don Siegel. Warner Bros. Entertainment Inc., 1971. Film.

- Kahneman, D., 2011, Thinking, Fast and Slow. London: Penguin Books.

- Near Miss Incident Report Form. Occupational Safety and Health Administration, U.S. Department of Labor, 2022, https://www.osha.gov/sites/default/files/2021-07/Template%20for%20Near%20Miss%20Report%20Form.pdf. PDF download.

- Weick, K.E. & Sutcliffe, K.M., 2007, Managing the Unexpected: Resilient Performance in an Age of Uncertainty, 2nd Ed. San Francisco, CA: Jossey-Bass.
